The Hidden Workforce Behind Artificial Intelligence

While artificial intelligence promises to revolutionize how we work and live, few users realize the human toll required to train these sophisticated systems. Across developing nations, a shadow workforce of data labelers faces psychological trauma, exhausting hours, and poverty wages to make AI chatbots and image generators functional. This global outsourcing operation represents what labor advocates call the dirty secret of the AI revolution.

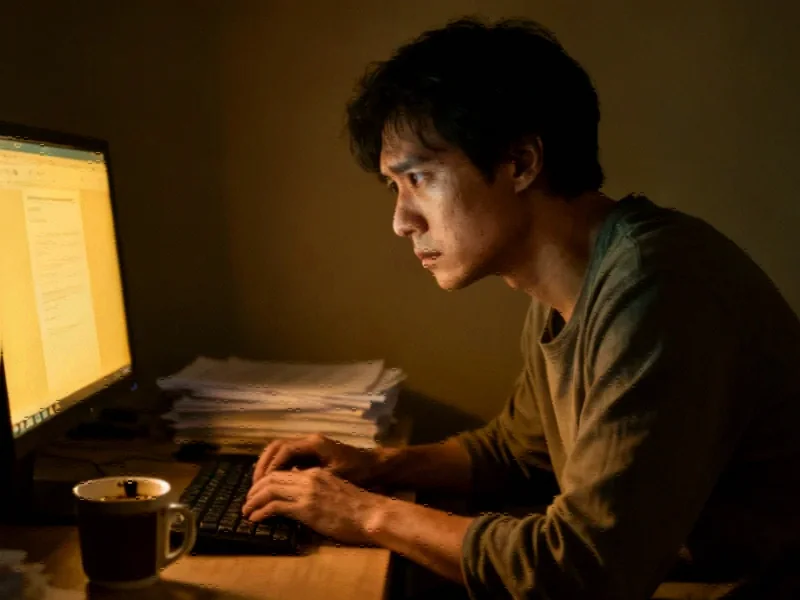

Trauma for Pennies: The Reality of Data Labeling

Data labeling—the process of categorizing and tagging information to train AI algorithms—has become a massive industry employing thousands in countries like Kenya, Colombia, and India. Workers describe sifting through thousands of disturbing images, including crime scenes and autopsies, for as little as one cent per task. “You have to spend your whole day looking at dead bodies and crime scenes,” Ephantus Kanyugi, a Kenyan data labeler, told Agence France-Presse. “Mental health support was not provided.”

The psychological impact is severe, with workers reporting anxiety, depression, eyesight problems, and chronic pain from marathon sessions that can stretch to 20 hours per day. Despite the demanding nature of the work, most data annotation laborers lack basic workplace protections or mental health resources, operating in a regulatory vacuum where few laws govern their profession.

The Corporate Structure of Exploitation

Major AI companies like OpenAI and Google avoid direct employment of these workers by partnering with third-party contractors. Scale AI, one of the industry’s largest players, operates through subsidiaries and shell companies that insulate the tech giants from responsibility. One Scale AI subsidiary, Remotasks, has drawn particular criticism for its compensation practices, which workers describe as resembling “modern slavery.”

This corporate structure enables what labor experts call a race to the bottom in working conditions and compensation. Without direct employment relationships, AI companies can distance themselves from the human cost of their products while benefiting from the cleaned and categorized data these workers produce. As global scrutiny increases, questions mount about corporate responsibility in the AI supply chain.

Parallels to Other Digital Industries

The data labeling industry bears a striking resemblance to social media content moderation, another field built on exploitative labor practices in developing countries. Both industries expose workers to traumatic content while offering minimal support and compensation. The remote nature of the work, while reducing corporate overhead, isolates workers, erodes any sense of workplace community, and undermines their collective bargaining power.

Recent industry developments in technology labor practices have highlighted similar concerns across sectors, suggesting this represents a broader pattern in how digital work is being structured globally.

The Global Economic Context

This exploitation occurs against a backdrop of shifting global economic relationships and power dynamics. Workers in countries with limited employment alternatives find themselves trapped in these arrangements, where the choice is between traumatic work and no work at all. The situation reflects how global economic pressures create vulnerable labor pools that technology companies readily exploit.

Resistance and Reform Efforts

Despite the challenging conditions, workers are beginning to organize and speak out. From Kenya to Colombia, data labelers are sharing their experiences and demanding better treatment. Their testimony has drawn attention to the need for international labor standards specifically addressing digital work and AI training.

Meanwhile, related innovations in worker protection and entrepreneurial approaches to ethical business practices offer potential models for reform. The growing awareness of these conditions coincides with increased examination of broader systemic issues across industries and even sector-specific challenges in regulated fields.

Toward Ethical Artificial Intelligence

The future of AI development hinges on whether technological advancement can be reconciled with human dignity. As consumers become aware of the human cost embedded in their AI tools, pressure mounts for more ethical approaches to training data. Some advocates propose certification systems for ethically sourced data, while others call for direct employment relationships with fair wages and benefits.

The current system, in which workers risk their mental health for pennies while tech companies reap billions, is what workers describe as “modern slavery.” Until the industry addresses this fundamental inequity, every query to ChatGPT or other AI systems will carry the invisible weight of this exploited global workforce.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.