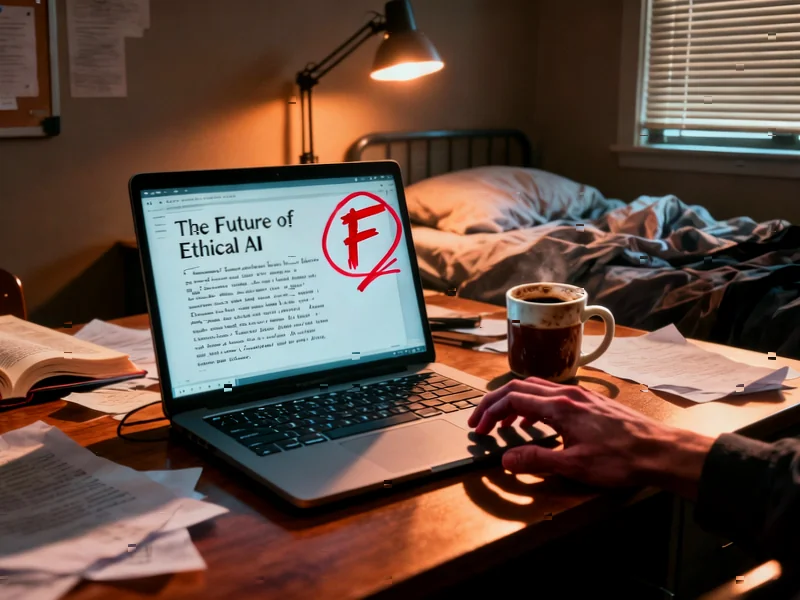

According to Ars Technica, University of Illinois professors Karle Flanagan and Wade Fagen-Ulmschneider discovered widespread cheating in their Data Science Discovery course when more students were answering attendance questions than were physically present in the 1,000-student class. After investigating server logs and IP addresses, they contacted approximately 100 students who appeared to be cheating and asked for explanations. The students responded with apologies, but the professors noticed that 80% of those apologies were nearly identically worded and appeared to be AI-generated. On October 17, they confronted the class, displaying the near-identical apologies. The incident highlights the growing challenges educators face as students come to depend on AI in academic settings.

Table of Contents

- The Technical Breakdown of Attendance Gaming

- The Unintended Consequences of AI Dependency

- The Impossible Position for Educators

- The Deeper Educational Philosophy Crisis

- The Institutional Response Gap

- Long-Term Implications for Workforce Development

- A Path Forward for Education and AI

- Related Articles You May Find Interesting

The Technical Breakdown of Attendance Gaming

The cheating method itself reveals how students are exploiting technical systems in sophisticated ways. The Data Science Clicker system uses QR codes and time-limited questions to verify physical presence, but students apparently coordinated through messaging platforms to share when questions would go live. This represents a shift from traditional cheating methods to organized, technology-enabled systems that bypass physical attendance requirements. The professors’ investigation of server logs and IP addresses shows they’re fighting technical deception with technical forensics, creating an arms race in academic integrity enforcement.
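The coverage does not describe the professors' actual tooling, so the following is only a rough illustration of the kind of log cross-checking described above: comparing the number of distinct respondents with a headcount, and flagging answers that arrived from addresses outside the campus network. Everything here is hypothetical: the `Response` record, the `CAMPUS_NETWORKS` ranges (private and documentation address blocks, not the university's real allocations), and the `excess_responses` and `off_campus` helpers are assumptions made for the sketch.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Response:
    """One attendance answer as it might appear in a hypothetical server log."""
    student_id: str
    ip: str


# Hypothetical campus address ranges. A real check would use the university's
# actual allocations; these are a private block and a documentation block.
CAMPUS_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("192.0.2.0/24"),
]


def excess_responses(responses, headcount):
    """How many more distinct students answered than were counted in the room."""
    distinct = {r.student_id for r in responses}
    return max(0, len(distinct) - headcount)


def off_campus(responses, networks=CAMPUS_NETWORKS):
    """Sorted IDs of students whose answer came from outside every campus range."""
    flagged = set()
    for r in responses:
        addr = ipaddress.ip_address(r.ip)
        # `in` is False when the address is outside the network (or a different IP version).
        if not any(addr in net for net in networks):
            flagged.add(r.student_id)
    return sorted(flagged)


if __name__ == "__main__":
    log = [
        Response("s1", "10.1.2.3"),
        Response("s2", "192.0.2.44"),
        Response("s3", "198.51.100.7"),  # hypothetical off-campus address
        Response("s3", "198.51.100.7"),  # duplicate submission from the same student
    ]
    print(excess_responses(log, headcount=2))  # → 1
    print(off_campus(log))  # → ['s3']
```

An off-campus address is a signal, not proof: VPNs, mobile data, and shared NAT addresses can produce false positives or hide real cheating. Flags like these are best treated as prompts for follow-up, much as the professors contacted students before drawing conclusions.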

The Unintended Consequences of AI Dependency

What makes this incident particularly telling is that students used AI for the apology itself – the one moment where authentic human expression matters most. This suggests a deeper dependency where students may be losing the ability to articulate genuine remorse or personal responsibility. The psychological impact of outsourcing emotional responses to algorithms could have long-term consequences for personal development and professional communication skills. When even apologies become automated, we’re witnessing a fundamental shift in how younger generations approach interpersonal accountability.

The Impossible Position for Educators

Professors Flanagan and Fagen-Ulmschneider face the same dilemma confronting educators nationwide: how to maintain academic integrity when AI tools make deception effortless. Reddit discussions cited in the coverage reveal the collateral damage: legitimate students falsely accused because AI detection remains unreliable. The result is a no-win scenario in which either cheating goes undetected or honest work falls under suspicion. The University of Illinois incident shows why current approaches are failing both educators and students.

The Deeper Educational Philosophy Crisis

This isn’t just about cheating – it’s about what education means in the AI age. When students can outsource both the work and the accountability, we’re confronting fundamental questions about the purpose of higher education. The incident raises concerns about whether students are developing critical thinking skills or simply becoming proficient at managing AI outputs. As one Reddit user noted about the apology situation, the problem extends beyond this single course to pervasive AI use across assignments.

The Institutional Response Gap

What’s most concerning is the lack of effective institutional responses. The professors treated this incident “rather lightly” according to the New York Times coverage, hoping it would serve as a “life lesson.” But when the consequences are minimal and the tools remain readily available, this approach seems inadequate. Universities need to develop comprehensive AI policies that address both prevention and education, rather than reacting to individual incidents. The current piecemeal approach leaves professors like Flanagan and Fagen-Ulmschneider developing their own solutions for systemic problems.

Long-Term Implications for Workforce Development

The most significant concern extends beyond campus to workforce readiness. If students graduate without developing independent critical thinking and problem-solving skills, we’re creating a generation of professionals who default to AI for both execution and accountability. This could lead to workplaces where original thinking becomes rare and innovation suffers. The incident suggests we need to rethink not just how we assess students, but what skills we’re actually trying to develop in an AI-saturated world.

A Path Forward for Education and AI

The solution isn’t simply better detection or stricter punishment. We need to redesign educational experiences to make AI use transparent and constructive rather than deceptive. This might include oral examinations, in-class writing assignments, or AI-assisted projects where the tool use is documented and analyzed. The goal should be teaching students to use AI as a tool for enhancement rather than replacement of their own cognitive development. Until we address the structural issues, we’ll continue seeing variations of the University of Illinois apology epidemic across campuses nationwide.