According to How-To Geek, the pervasive “CPU bottleneck” fear is causing PC gamers to overspend by hundreds of dollars on processors they don’t need. The core argument is that modern gaming performance is overwhelmingly dictated by the GPU, not the CPU, particularly at 1440p and 4K. While exceptions exist for competitive esports at 1080p and for simulation-heavy games, the piece says that pairing an expensive CPU like the Intel Core i9-14900K with a budget GPU like the RTX 4060 is a common and costly mistake, one often seen in prebuilt systems. The article champions more balanced, cost-effective choices like the AMD Ryzen 7 9800X3D or the Intel Core i5-14600K (the latter can be found for under $250), which it says deliver nearly identical gaming performance to flagship chips in most scenarios. The practical takeaway is a call to shift budget from the CPU to the graphics card for a bigger real-world fps boost.

The GPU is the real star

Here’s the thing: this advice isn’t *new*, but it’s clearly not getting through. We’ve been in a GPU-dominated gaming era for years, yet the top-tier CPU still has an almost mythical pull on a builder’s psyche. I think it’s because the CPU is the “brain,” and it feels wrong to cheap out on it. But in a gaming rig, the GPU isn’t just the brawn; it’s the entire visual cortex. It’s doing the enormous volume of math behind lighting, shadows, and high-res textures, for every pixel of every frame. Your CPU is more like a project manager: it runs the game logic, physics, and AI and tells the GPU what to draw each frame, but it’s not doing the heavy rendering lift.

And the resolution point is critical. At 1080p, yeah, a beastly CPU can push more frames, because the GPU finishes each frame quickly and the CPU becomes the limiting factor. But who’s buying a high-end rig for 1080p anymore? The moment you step up to 1440p or 4K, the workload shifts so dramatically to the GPU that even a mid-range CPU is often just… waiting. Spending double for a chip that sits idle even more of the time is just bad economics.
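
To make the resolution argument concrete, here’s a deliberately simplified model in Python, a sketch rather than a benchmark. It assumes the frame rate is set by whichever component is slower, that the CPU’s frame-rate cap doesn’t change with resolution, and that GPU throughput falls in proportion to pixel count. The function name and every fps figure are hypothetical placeholders, not measurements of any real part.

```python
# Simplified bottleneck model (an assumption for illustration, not a benchmark):
# the slower of CPU and GPU sets the frame rate.

BASE_PIXELS = 1920 * 1080  # 1080p reference resolution

RESOLUTIONS = {
    "1080p": 1920 * 1080,
    "1440p": 2560 * 1440,
    "4K": 3840 * 2160,
}


def effective_fps(cpu_fps_cap: float, gpu_fps_at_1080p: float, pixels: int) -> float:
    """Return the frame rate allowed by the slower component.

    cpu_fps_cap      -- frames/s the CPU can prepare (assumed resolution-independent)
    gpu_fps_at_1080p -- frames/s the GPU renders at 1920x1080
    pixels           -- pixel count of the target resolution
    """
    # Assume the GPU's frame rate falls in proportion to the number of pixels drawn.
    gpu_fps = gpu_fps_at_1080p * BASE_PIXELS / pixels
    return min(cpu_fps_cap, gpu_fps)


# Hypothetical parts: a mid-range CPU capped at 160 fps, a flagship at 200 fps,
# and one GPU that manages 180 fps at 1080p.
for name, px in RESOLUTIONS.items():
    mid = effective_fps(160, 180, px)
    flagship = effective_fps(200, 180, px)
    print(f"{name}: mid-range CPU {mid:.0f} fps, flagship CPU {flagship:.0f} fps")
```

Under these assumptions the flagship’s lead exists only at 1080p (180 vs 160 fps); at 1440p (101 fps) and 4K (45 fps) both chips wait on the same GPU. Real games don’t scale this cleanly, but the shape of the result is the point.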

Why we keep falling for it

So why is this myth so persistent? The article nails a couple of reasons. Prebuilt vendors are a huge culprit. Slapping an “i9” on a spec sheet looks incredible to a casual buyer, even when it’s paired with a mediocre GPU. It’s classic spec-sheet marketing. But we do it to ourselves, too. There’s a psychological comfort in “future-proofing” with a top-tier CPU. We think, “Well, I’ll upgrade the GPU later.” But by the time you do, the CPU market has moved on, and there’s probably a new socket and chipset anyway. That future-proofing is often a fantasy.

Look, I’ve been guilty of this. It’s easy to get sucked into the benchmark wars and think you need that extra 5 to 10% performance. But when you’re talking about going from 100 fps to 110 fps for an extra $300? That’s insane. Each frame arrives less than a millisecond sooner, a difference you’d be hard-pressed to perceive. That money is almost always better spent on a better GPU, a nicer monitor, or just… saved.
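
The arithmetic behind that complaint is quick to check. This small Python sketch converts frame rates to frame times and prices each extra frame per second, using the 100 fps, 110 fps, and $300 figures from the paragraph above; the helper names are made up for illustration.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds spent on each frame at a given frame rate."""
    return 1000.0 / fps


def dollars_per_extra_fps(extra_cost: float, fps_before: float, fps_after: float) -> float:
    """How much each additional frame per second costs."""
    return extra_cost / (fps_after - fps_before)


saved_ms = frame_time_ms(100) - frame_time_ms(110)
print(f"Each frame arrives {saved_ms:.2f} ms sooner")                # 0.91 ms
print(f"${dollars_per_extra_fps(300, 100, 110):.0f} per extra fps")  # $30
```

That’s $30 for every extra frame per second, in exchange for shaving roughly 0.91 ms off a 10 ms frame.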

The right way to balance your build

The advice here is simple but effective: start with the GPU. Decide your target resolution, your desired visual settings, and your budget. Then find the graphics card that hits that mark. *Then* find a CPU that won’t hold it back. For the vast majority of gamers, that’s a Ryzen 5 or Core i5, or at most a Ryzen 7 or Core i7. The flagship Ryzen 9 and Core Ultra 9 parts? They’re for content creators, streamers, and data crunchers.
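
The GPU-first procedure can be sketched as a tiny selection routine in Python. Treat it as a hypothetical illustration, not a real buying tool: the part names, prices, and `fps_cap` values are invented placeholders, and it assumes you already know roughly how many frames per second each part can sustain at your target resolution and settings (for example, from reviews).

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Part:
    name: str
    price: int      # USD
    fps_cap: float  # frames/s this part can sustain at the target resolution and settings


def pick_build(gpus: List[Part], cpus: List[Part], budget: int) -> Optional[Tuple[Part, Part]]:
    """GPU-first selection: take the fastest GPU that can still be paired,
    within budget, with a CPU that won't hold it back."""
    # Step 1: consider GPUs from fastest to slowest.
    for gpu in sorted(gpus, key=lambda g: g.fps_cap, reverse=True):
        remaining = budget - gpu.price
        # Step 2: CPUs that fit the leftover money and can keep up with this GPU.
        keeps_up = [c for c in cpus if c.price <= remaining and c.fps_cap >= gpu.fps_cap]
        if keeps_up:
            # Step 3: the cheapest such CPU; a pricier one buys frames the GPU can't render.
            return gpu, min(keeps_up, key=lambda c: c.price)
    return None  # nothing fits the budget


# Invented placeholder data, not real products or benchmarks.
gpus = [Part("GPU-entry", 300, 70), Part("GPU-mid", 550, 110), Part("GPU-high", 800, 150)]
cpus = [Part("CPU-mid", 220, 160), Part("CPU-upper", 350, 190), Part("CPU-flagship", 550, 210)]

build = pick_build(gpus, cpus, budget=1100)
if build:
    gpu, cpu = build
    print(gpu.name, cpu.name, gpu.price + cpu.price)  # GPU-high CPU-mid 1020
```

In this toy data, spending $550 on CPU-flagship out of a $1,100 budget leaves room only for GPU-mid, capping the build at 110 fps; the GPU-first route reaches 150 fps and costs $80 less.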

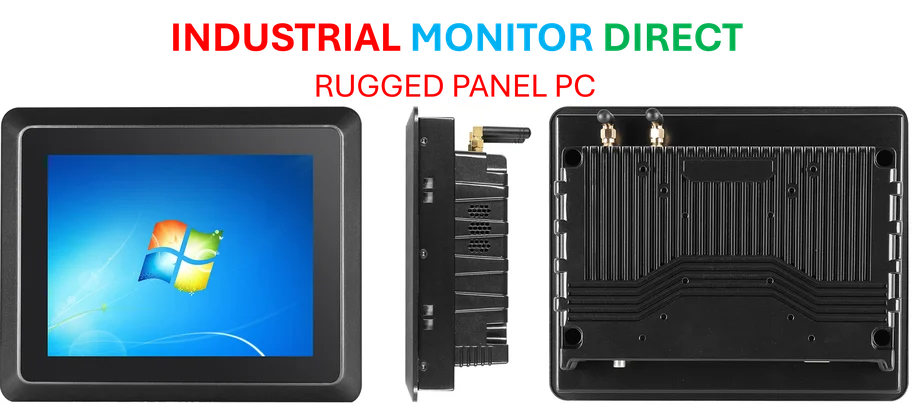

It’s a balancing act, and the sweet spot is rarely at the top.

When does the CPU matter?

Okay, so it’s not *never*. The article correctly points out the exceptions. If you live in competitive esports at 1080p, trying to push 360+ fps to feed a 360 Hz monitor, then yes, CPU power matters. Simulation-heavy games like *Cities: Skylines II* or late-game *Civilization* with thousands of units are brutal on processors. And if you’re like me and have 50 Chrome tabs open, Discord, and a game running, those extra cores help absorb the background load.
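
Why high refresh rates flip the equation comes down to time per frame: to deliver a new frame on every refresh, the CPU has to finish its share of each frame within 1000 / refresh-rate milliseconds. The Python sketch below checks that for a hypothetical CPU needing 4 ms of work per frame; the function name and the 4 ms figure are illustrative assumptions, not measurements.

```python
def cpu_keeps_up(cpu_ms_per_frame: float, refresh_hz: float) -> bool:
    """True if the CPU's per-frame work fits inside one refresh interval."""
    budget_ms = 1000.0 / refresh_hz  # e.g. 360 Hz leaves about 2.78 ms per frame
    return cpu_ms_per_frame <= budget_ms


CPU_MS = 4.0  # hypothetical: CPU time spent preparing each frame

for hz in (60, 144, 240, 360):
    status = "keeps up" if cpu_keeps_up(CPU_MS, hz) else "falls behind"
    print(f"{hz} Hz: budget {1000.0 / hz:.2f} ms, CPU {status}")
```

That hypothetical chip tops out at 250 fps: plenty for a 144 Hz or 240 Hz panel, short of 360 Hz. This is the one scenario where a faster CPU clearly earns its price.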

But for the standard “I want to play AAA games at high settings” use case? The path is clear. Don’t let the bottleneck boogeyman scare you into an unnecessary purchase. Put your money where the pixels are: the graphics card. Your wallet—and your framerate—will thank you.