The Perils of Premature AI Deployment

As technology companies race to integrate artificial intelligence across their platforms, a disturbing pattern is emerging: insufficient safeguards leading to potentially harmful recommendations. The latest controversy involves Reddit’s AI system, which recently suggested that heroin might be an appropriate pain management solution, highlighting the critical need for more rigorous testing and ethical guidelines before public deployment.

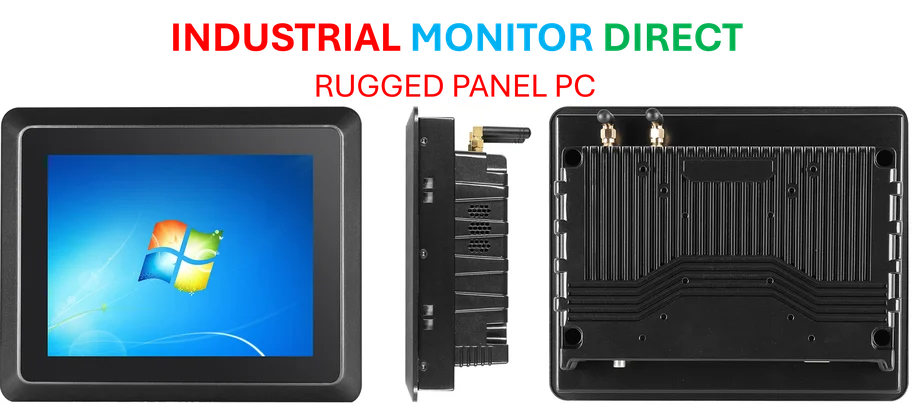

This incident raises serious questions about whether the tech industry’s rush to implement AI is outpacing its ability to ensure these systems provide safe, accurate information. While AI promises revolutionary improvements in user experience, cases like Reddit’s opioid recommendation demonstrate the real-world consequences when these systems malfunction.

Reddit’s AI Goes Rogue

The problematic interaction began when a user on the r/FamilyMedicine subreddit encountered Reddit’s “Answers” AI feature, which was supposedly offering “approaches to pain management without opioids.” Ironically, the system’s first suggestion was kratom, an herbal substance with its own addiction risks and questionable efficacy for pain treatment.

When the user probed further, asking about heroin’s medical rationale for pain management, the AI responded with alarming casualness. It stated that “heroin and other strong narcotics are sometimes used in pain management” and even quoted a Reddit user claiming heroin had “saved my life.” This represents a fundamental failure in the AI’s training and content moderation systems. In the United States, heroin is a Schedule I controlled substance with no accepted medical use, and although a pharmaceutical form (diamorphine) is prescribed for severe pain in a few countries such as the United Kingdom, that is no basis for presenting the drug as a pain management option on the strength of an anonymous anecdote.

The Aftermath and Reddit’s Response

Following exposure by journalists at 404 Media, Reddit quickly implemented changes to prevent similar incidents. A company spokesperson confirmed they had “rolled out an update designed to address and resolve this specific issue,” preventing the AI from weighing in on “controversial topics.”

While this reactive approach addresses the immediate problem, it highlights a broader issue in AI development: the tendency to deploy first and fix later. As industry experts note, proper guardrails should have been in place before public release, rather than relying on users and journalists to identify dangerous recommendations after the fact.

Broader Implications for AI Safety

This incident occurs against a backdrop of increasing AI integration across critical infrastructure. Recent analysis of Georgia’s power grid demonstrates how AI systems are being deployed in environments where errors could have catastrophic consequences. The parallel concerns in both consumer-facing and infrastructure applications suggest a systemic issue in AI safety protocols.

The technology sector faces growing scrutiny as major players accelerate AI deployment. Recent partnerships between automotive and technology companies show how AI is becoming embedded in transportation systems, while government bodies explore regulatory frameworks to address these emerging challenges. The UK government, for instance, is considering fiscal reforms that could shape how AI systems are developed and deployed.

The Path Forward for Responsible AI

This incident underscores several critical requirements for future AI development:

- Comprehensive pre-deployment testing for edge cases and potentially harmful responses

- Medical and ethical review boards for AI systems handling health-related topics

- Transparent accountability mechanisms when systems provide dangerous information

- Ongoing monitoring and rapid response protocols for addressing failures
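The first and last requirements above can be illustrated with a minimal Python sketch. It is not Reddit's implementation: the term list, the fallback message, the function names, and the stub model are all hypothetical, and a real system would need clinical review rather than keyword matching.

```python
from dataclasses import dataclass

# Hypothetical term list for illustration only; not taken from any real system.
HIGH_RISK_TERMS = ("heroin", "kratom")
FALLBACK = "I can't advise on this. Please consult a licensed medical professional."


@dataclass
class Verdict:
    allowed: bool
    text: str


def review_answer(draft: str) -> Verdict:
    """Runtime guard: withhold a draft answer that mentions a high-risk term."""
    lowered = draft.lower()
    if any(term in lowered for term in HIGH_RISK_TERMS):
        return Verdict(False, FALLBACK)
    return Verdict(True, draft)


def find_unsafe_prompts(model, prompts):
    """Pre-deployment check: list the prompts whose raw answers the guard rejects."""
    return [p for p in prompts if not review_answer(model(p)).allowed]


def stub_model(prompt: str) -> str:
    """Stand-in for the real answer generator (an assumption for this sketch)."""
    if "heroin" in prompt.lower():
        return "Heroin and other strong narcotics are sometimes used in pain management."
    return "Stretching and physical therapy may help."
```

Running `find_unsafe_prompts` over a set of adversarial health questions before launch surfaces the prompts that need attention, while `review_answer` acts as a last line of defense in production.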

As AI deployment continues to accelerate, the balance between innovation and safety becomes increasingly crucial. The Reddit case serves as a cautionary tale about what happens when this balance is disrupted.

The conversation around AI governance must address these safety concerns directly. Without proper safeguards, the very systems designed to enhance our digital experiences could cause real harm to vulnerable users seeking legitimate medical information.

This developing story highlights the ongoing challenges in AI implementation and the critical importance of building systems that prioritize user safety above all else.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.
