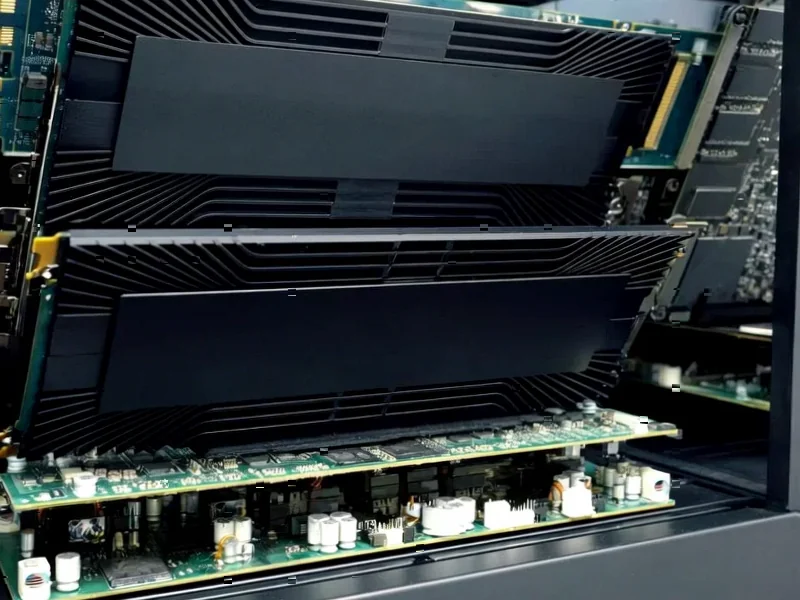

According to DCD, Qualcomm has launched its AI200 and AI250 hardware offerings, aimed specifically at AI inference workloads in the data center. The accelerator cards and racks are built on Qualcomm's Hexagon neural processing units (NPUs). The AI200 supports 768GB of LPDDR memory per card, while the AI250 introduces an innovative memory architecture that Qualcomm says delivers more than ten times higher effective memory bandwidth. Both solutions use direct liquid cooling, PCIe for scale-up, and Ethernet for scale-out, and draw 160kW per rack. The company also announced that Saudi AI venture Humain plans to deploy 200MW of these solutions in Saudi Arabia and globally. Commercial availability is expected in 2026 for the AI200 and 2027 for the AI250, with an unnamed next-generation product targeted for 2028. The launch is Qualcomm's most significant push yet into the enterprise AI infrastructure market.



The Data Center Gambit

Qualcomm's entry into rack-scale AI infrastructure marks a fundamental strategic shift for a company traditionally dominant in mobile and edge computing. While Qualcomm has dabbled in data center technologies before, the AI200 and AI250 represent its most comprehensive push into the enterprise AI market to date. The move positions Qualcomm directly against established players like Nvidia, whose dominance in AI training and inference has raised widespread concern about market concentration. The timing is deliberate: as enterprises deploy generative AI models at scale, demand for efficient inference hardware has surged, creating openings for new entrants with differentiated architectures.

The Memory Bandwidth Breakthrough

The most technically intriguing aspect of Qualcomm's announcement is the AI250's "innovative memory architecture," which promises more than ten times higher effective memory bandwidth. The company hasn't disclosed what that figure is measured against, but it plausibly combines LPDDR memory with near-memory computing, placing compute elements closer to the memory banks so that less data has to cross the memory interface. This targets one of the fundamental bottlenecks in AI inference. When a large language model generates text one token at a time, each new token requires reading essentially all of a dense model's weights from memory, so the rate at which memory can deliver those bytes, rather than raw compute, often caps throughput. That makes this architectural change potentially more significant than a pure increase in compute.
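To make the bandwidth argument concrete, here is a back-of-the-envelope sketch. It assumes a dense model decoded at batch size 1, with every weight read once per generated token, and it ignores KV-cache traffic, batching, and compute time. The bandwidth values and the 70B-parameter model are hypothetical placeholders, since Qualcomm has not published absolute bandwidth numbers; only the 768GB per-card capacity comes from the announcement.

```python
"""Rough, bandwidth-bound estimate of LLM decode throughput.

Assumptions (illustrative only, not Qualcomm figures):
- dense model, batch size 1, every weight read once per generated token
- KV-cache reads, activations, and compute time are ignored
"""


def max_decode_tokens_per_sec(bandwidth_gb_s: float,
                              params_billion: float,
                              bytes_per_param: float) -> float:
    """Upper bound on tokens/s when streaming weights is the bottleneck."""
    bytes_per_token = params_billion * 1e9 * bytes_per_param
    return bandwidth_gb_s * 1e9 / bytes_per_token


def max_params_billion(capacity_gb: float, bytes_per_param: float) -> float:
    """Largest model (in billions of parameters) whose weights alone fit in memory."""
    return capacity_gb / bytes_per_param


if __name__ == "__main__":
    # Hypothetical baseline bandwidth vs. a 10x "effective" improvement.
    baseline_gb_s = 500.0
    improved_gb_s = baseline_gb_s * 10

    # Hypothetical 70B-parameter model quantized to 8 bits (1 byte per weight).
    for bw in (baseline_gb_s, improved_gb_s):
        tps = max_decode_tokens_per_sec(bw, params_billion=70, bytes_per_param=1.0)
        print(f"{bw:>6.0f} GB/s -> at most {tps:.1f} tokens/s")

    # 768GB per AI200 card (from the announcement), FP16 weights (2 bytes each).
    print(f"768 GB holds weights for ~{max_params_billion(768, 2.0):.0f}B params at FP16")
```

Because this ceiling scales linearly with bandwidth, a genuine tenfold gain in effective bandwidth would raise it tenfold, which is why memory, not compute, tends to be the headline spec for inference parts. In practice, batching amortizes each weight read across many requests, so real systems can exceed this single-stream bound in aggregate.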

The Humain Partnership: More Than Megawatts

The 200MW deployment with Saudi AI venture Humain is more than a large customer deal: it is a strategic beachhead in the rapidly evolving Middle Eastern AI landscape. Saudi Arabia's heavy investment in AI infrastructure as part of its Vision 2030 economic diversification plan makes the partnership particularly noteworthy. That the deployment will support Humain's ALLaM models suggests Qualcomm is positioning itself as an infrastructure provider for sovereign AI initiatives, a growing trend in which nations develop their own AI capabilities rather than relying exclusively on international cloud providers. The partnership could serve as a template for similar sovereign AI deployments elsewhere, giving Qualcomm a differentiated market position beyond competing on technical specifications alone.

Challenging the Inference Status Quo

Qualcomm faces significant challenges in disrupting the established data center AI hierarchy. Nvidia's comprehensive software ecosystem, including CUDA and its extensive library of optimized AI frameworks, is a formidable barrier to entry. However, Qualcomm's emphasis on a "rich software stack and open ecosystem support" suggests it has learned from the failures of earlier challengers. Its promises of "one-click model deployment" and "seamless compatibility for leading AI frameworks" indicate a focus on developer experience, a factor hardware-focused newcomers often underestimate. Its mobile heritage also gives it particular expertise in power efficiency, which could prove decisive as power consumption becomes an increasing constraint on AI deployment at scale.

The 2026-2028 Timeline: Strategic or Concerning?

The commercial availability timeline, 2026 for the AI200 and 2027 for the AI250, raises questions about market timing. In the fast-moving AI hardware space, waiting one to two years for shipping products risks technological obsolescence. However, the extended timeline may reflect the complexity of delivering complete rack-scale systems rather than individual chips. The commitment to an "annual cadence," with a 2028 product already planned, suggests Qualcomm sees this as a long-term strategic investment rather than a one-off product launch. The real test will be whether it can maintain competitive differentiation as Nvidia, AMD, and cloud providers' custom silicon continue to evolve at their own accelerated pace.


The Infrastructure Reality Check

The 160kW rack-level power figure, while not extraordinary for high-density AI workloads, highlights the growing infrastructure challenges facing AI inference at scale. As models grow larger and deployments expand, power and cooling become critical constraints. Qualcomm's use of direct liquid cooling acknowledges this reality, but facilities built around air cooling will need significant retrofitting before they can host these racks. Claims of "much lower power consumption" will need rigorous independent verification, since power efficiency, not just raw computational performance, has become the new battleground in AI hardware.
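The rack figure also allows a rough sanity check on the scale of the Humain deal. The sketch below treats the 200MW as a power budget for the deployment and assumes every rack draws the full 160kW; both readings are assumptions, as is the PUE (power usage effectiveness) value used to reserve headroom for cooling and power conversion, which neither Qualcomm nor Humain has disclosed.

```python
"""Back-of-the-envelope rack count for a power budget.

Assumptions (illustrative only):
- the 200MW commitment is read as a power budget for the deployment
- every rack draws the full 160kW rated figure
- pue > 1.0 reserves part of the budget for cooling/power-conversion overhead
"""


def racks_supported(budget_mw: float, rack_kw: float, pue: float = 1.0) -> int:
    """Whole number of racks that fit in the budget after overhead."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    it_power_kw = budget_mw * 1000 / pue  # power left for the racks themselves
    return int(it_power_kw // rack_kw)


if __name__ == "__main__":
    # Upper bound: all 200MW goes to the racks.
    print(racks_supported(200, 160))
    # With a hypothetical PUE of 1.2 for cooling and conversion losses.
    print(racks_supported(200, 160, pue=1.2))
```

Even the upper bound of 1,250 racks, each requiring direct liquid cooling, gives a sense of the facility work a deployment of this size implies.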

Shifting the AI Hardware Balance

Qualcomm’s entry represents another significant challenge to Nvidia’s dominance, potentially accelerating the fragmentation of the AI hardware market. As more specialized players enter the inference space, we’re likely to see increased competition on price, power efficiency, and specialized capabilities. For enterprises, this diversification could mean more negotiating leverage and better-tailored solutions for specific workload requirements. However, it also risks creating compatibility challenges and increased complexity in managing heterogeneous AI infrastructure. The success of Qualcomm and other challengers will depend not just on hardware performance but on their ability to build robust software ecosystems and demonstrate real-world total cost of ownership advantages for large-scale deployments.
