The Rise of AI in Academia

As artificial intelligence becomes more deeply integrated into education, universities worldwide are grappling with how to harness its potential while preventing academic misconduct. De Montfort University (DMU) in Leicester is one institution tackling this challenge directly: it is training educators to identify improper AI use while encouraging students to use the technology ethically.


Dr. Abiodun Egbetokun, associate professor of entrepreneurship and innovation at DMU, acknowledges the complexity: “We’re still in the take-off stage of AI. There are more and more academics and students making use of different AI tools for different purposes.” The rapid advancement of these technologies makes detection increasingly difficult, pushing institutions to develop more sophisticated approaches.

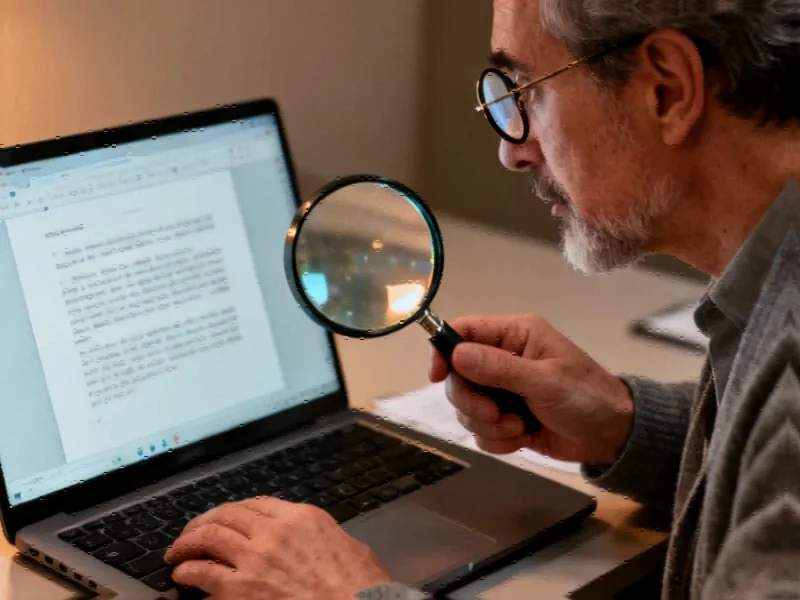

Training the Detectives: How Lecturers Spot AI Misuse

DMU has implemented face-to-face training sessions and guidance for lecturers to identify potential AI misconduct. According to Dr. Egbetokun, educators are learning to recognize “specific markers” that might indicate AI-generated content, including unusual repetition of certain words or American spellings in work from UK students.

Brett Koenig, associate head of education in business and law, describes developing both intuition and technical skills: “I do sometimes get a ‘gut feeling’ when the technology has been used wrongfully, but the training has helped me to look for specific markers, such as certain punctuation patterns.” He notes that excessive repetition of words like “fostering” can raise flags, though he emphasizes this doesn’t automatically indicate cheating.

The aim of this training is to protect academic integrity without stifling legitimate, innovative uses of the technology.

Encouraging Ethical AI Use

Despite concerns about misconduct, DMU actively encourages appropriate AI usage. Shushma Patel, pro vice-chancellor for artificial intelligence at DMU, explains: “Our role as educators has to be to encourage students to think critically about AI.” The university’s policy permits students to use AI to “support their thinking” or clarify unclear tasks, provided they transparently document how they’ve used the technology.

Jennifer Hing, academic practice officer, offers a simple guideline: “The biggest thing to remember is, is it your work? Is it your words? If it is not, then you’ve crossed a line.” She notes that most AI misuse appears accidental, stemming from “a lack of skill or knowledge, or confidence” rather than malicious intent.

The Detection Dilemma

Staff at DMU express reservations about automated AI detection software. Dr. Ruth McKie, senior lecturer in criminology, shares her experience: “I put some work through an AI checker just to see what it comes up with and most of the time it says all of it is AI-generated, and I know that’s rubbish.” She recounts how a friend’s PhD thesis was flagged as “100% AI” despite containing no AI-generated content.

This unreliability helps explain why such software is not widely used by lecturers at DMU. Mr. Koenig emphasizes the consequences of false accusations: "It's as damaging to a student to falsely accuse them as it is to accurately say that they have committed plagiarism. So, it's our job to move with the times rather than just try to catch them out."


Student Perspectives on AI Integration

Students recognize both the utility and limitations of AI in their academic work. Yassim Hijji, a 19-year-old engineering student who speaks English as his third language, uses AI to translate complex concepts: “Sometimes I write the message in my language, which is Italian or Arabic, and then I ask it to translate it to English. If it helps you to get better then why not use it? It’s like using a book at the end of the day.”

Healthcare students see particular relevance for their future professions. Jodie Hurt, a 37-year-old nursing student, believes “there’s definitely a place” for AI in healthcare, suggesting it could “help protect” nurses and patients through tools that document consultations.

However, her classmate Lucy Harrigan emphasizes boundaries: “You can’t copy and paste, you can’t use it as a reliable source.” The 36-year-old considers submitting AI-generated work as original to be “massive misconduct” that undermines the purpose of education: “It’s not showing your knowledge, you’ve got to earn your degree.”

Broader Implications and Future Directions

Dr. McKie stresses the importance of acknowledging AI as a “necessary tool” in contemporary society. She advocates for understanding why students use AI rather than automatically assuming malicious intent: “We need to start figuring out why [it’s been used], we can’t just automatically assume that they’ve used it for the easy route out.”


The conversation around AI in education continues to evolve as institutions like Oxford University provide access to ChatGPT Edu, the education-specific version of the AI tool. Meanwhile, global surveys indicate many students use AI to assist their studies while simultaneously worrying about its impact on their future careers.

As educational institutions navigate this new landscape, the focus remains on developing policies that embrace AI's potential while maintaining academic integrity. The experience at DMU illustrates that wider adoption of the technology requires an equally considered approach to ethics and teaching.

The path forward appears to combine technological awareness with pedagogical judgement, treating AI as both a tool and a challenge in equal measure.


This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.