According to Techmeme, Microsoft CEO Satya Nadella said the company has NVIDIA GPUs sitting in racks that cannot be switched on because there is not enough power available to run them. Nadella argued that the main bottleneck for scaling AI is no longer compute but power and data center space. He also cautioned against over-investing in any single generation of NVIDIA hardware, citing the rapid annual improvements in GPU capabilities and the limited useful lifespan of each generation. The admission comes as the AI industry faces growing infrastructure challenges that go beyond chip availability.

The Real Infrastructure Crisis

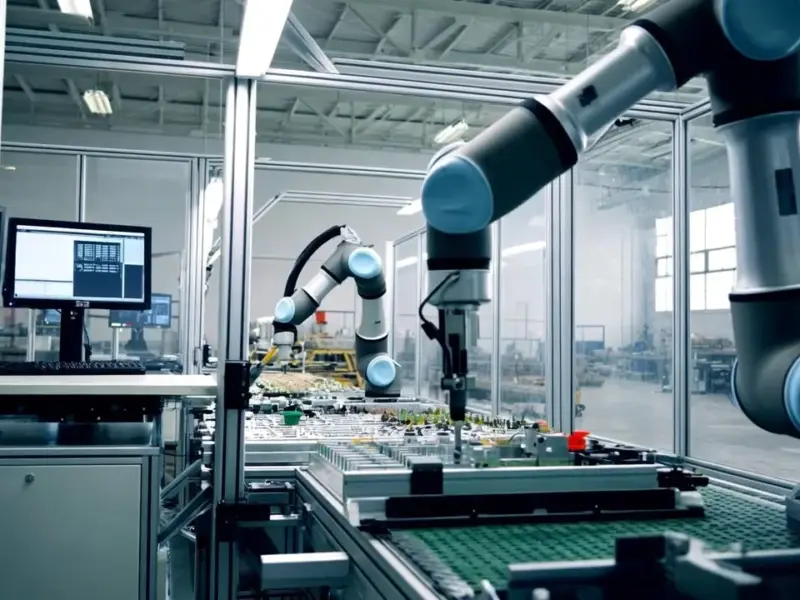

Nadella’s comments underscore what industry insiders have suspected for months: the AI boom is hitting physical limits that money alone can’t quickly solve. While much attention has focused on NVIDIA’s GPU dominance and supply constraints, the deeper issue is power infrastructure, which takes years rather than months to build. AI training data centers draw far more power than traditional cloud facilities, and the largest training clusters now consume as much electricity as a small city. The result is a fundamental mismatch between the rapid pace of AI development and the slow timeline of energy infrastructure projects.

Strategic Implications

Microsoft’s predicament highlights two critical strategic considerations. First, the company’s cautious approach to GPU procurement reflects disciplined capital allocation: it avoids committing heavily to hardware that depreciates quickly as NVIDIA releases a new generation each year. Second, the energy constraint creates competitive moats for established players with existing power contracts and data center footprints. New entrants face not just capital requirements but multi-year waits for power allocation, which effectively limits competition to a handful of hyperscalers with established infrastructure relationships.

Market Opportunities Emerging

The power bottleneck is creating significant opportunities across several sectors. Energy providers with available capacity near major tech hubs suddenly hold strategic assets, while companies specializing in data center efficiency and cooling technologies are seeing surging demand. Renewable energy projects that seemed speculative a few years ago now represent critical infrastructure for AI growth. Meanwhile, software optimization companies that can deliver better performance per watt are well positioned in this constrained environment: when power is the binding limit, every percentage point of efficiency gained translates directly into more deployable compute.

The Future Landscape

Looking forward, this infrastructure constraint is likely to reshape AI development priorities and business models. Companies will increasingly focus on model efficiency rather than pure scale, favoring architectures that deliver comparable results with lower computational requirements. We’re likely to see more specialized AI hardware optimized for specific workloads rather than general-purpose training hardware, along with increased investment in edge computing solutions that distribute computational load. The era of simply throwing more compute at AI problems is ending, replaced by a more disciplined approach that treats energy as the scarce resource it has become.