According to MIT Technology Review, Microsoft AI CEO Mustafa Suleyman has stated definitively that the company will never build sex robots, drawing a clear ethical boundary in the competitive AI landscape. In a recent TED Talk and a follow-up interview, Suleyman emphasized Microsoft’s 50-year mission of building software that empowers people rather than replacing human connection. He framed Microsoft’s more deliberate approach as a strategic advantage at a time when competitors such as Grok, from Elon Musk’s xAI, and OpenAI are exploring flirtatious chatbots and adult interactions. Suleyman’s vision centers on AI that helps users connect with real-world communities rather than drawing them into artificial relationships, a significant philosophical divergence in the industry’s direction.

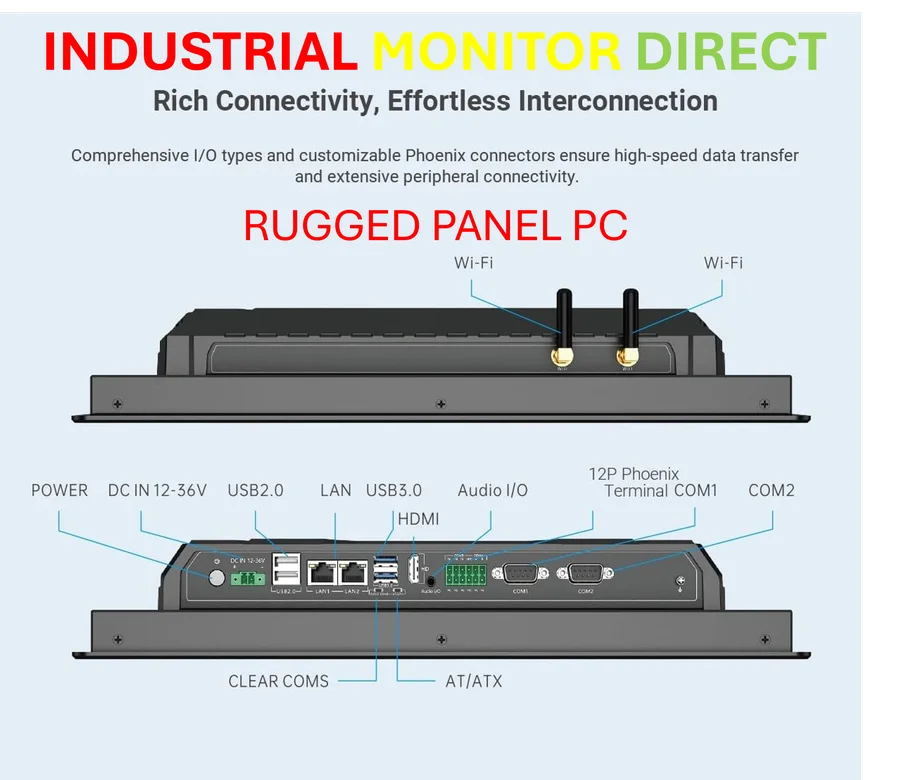

The Business Case for Ethical Constraints

While Suleyman’s statement might appear to be simple corporate responsibility, it represents a sophisticated market-positioning strategy. Microsoft is leveraging its enterprise heritage and established brand trust to differentiate itself in a crowded AI market. Companies building chatbots with adult-oriented capabilities face significant regulatory and reputational risks that could limit their enterprise adoption. By establishing clear boundaries early, Microsoft offers business customers the predictable, professional AI interactions they require. This approach mirrors how established technology companies have historically succeeded by serving the broad enterprise market rather than chasing niche consumer trends that carry heavier compliance burdens.

The Technical Realities Behind the Pledge

Suleyman’s declaration also touches on technical challenges in AI development that go beyond policy. The architecture required for intimate AI interactions differs fundamentally from that of productivity-focused systems such as Microsoft’s Copilot. Intimate chatbots require sophisticated emotional modeling, a consistent personality, and careful boundary management, each of which introduces complex engineering challenges. More importantly, they raise serious safety and security concerns: intimate conversations generate highly sensitive data that demands exceptional protection. Microsoft’s stance acknowledges that some AI applications, whatever the market demand, may introduce technical complexities that outweigh their commercial benefits.

Competitive Landscape and Market Fragmentation

The AI industry appears to be fragmenting along ethical lines, creating what could become permanently separate market segments. While Microsoft positions itself as the responsible enterprise partner, competitors pursuing adult AI interactions are targeting different customer bases with different expectations. This divergence mirrors historical technology splits where business and consumer markets developed parallel ecosystems with distinct standards. The risk for Microsoft lies in potentially ceding significant market share if adult-oriented AI proves to be the killer application that drives mainstream adoption. However, the greater risk for the industry overall is regulatory backlash against problematic applications that could impose restrictions affecting all AI developers.

Long-Term Implications for AI Adoption

Suleyman’s emphasis on AI that enhances real-world connections rather than replacing them reflects growing concern about technology’s social impact. As robotics and AI capabilities advance, the distinction between tools and companions grows increasingly blurred. Microsoft’s position suggests the company is betting that sustainable AI adoption requires technology that integrates with, rather than disrupts, human social structures. This philosophy extends beyond avoiding sex robots; it shapes how Microsoft designs group chat features, collaboration tools, and community-oriented applications. The success of this approach will depend on whether users ultimately value AI as a productivity enhancer or seek deeper emotional connections with artificial entities.

The Regulatory Foresight in Corporate Policy

Microsoft’s preemptive ethical stance reflects sophisticated regulatory anticipation. Governments worldwide are struggling to establish frameworks for AI governance, and companies that self-regulate problematic applications position themselves favorably in coming policy debates. By declaring that it won’t build sex robots before any regulation forces the issue, Microsoft gains credibility with policymakers, who may grant it more flexibility in other areas. This strategy has proven effective in other technology sectors, where early adopters of ethical standards avoided later restrictive legislation. As AI moves from theoretical concept to daily reality, such forward-looking corporate policies may determine which companies lead and which face reactive constraints.