According to Inc., a recent University of Chicago survey found that 4 in 10 U.S. adults are “extremely” worried about AI’s environmental impact. A Morgan Stanley report projects that global AI data center water consumption will reach 1,068 billion liters annually by 2028, 11 times higher than last year’s estimate. For comparison, the average American uses about 243,174 liters per year. In stark contrast, OpenAI CEO Sam Altman has claimed each ChatGPT query uses “about 0.000085 gallons of water; roughly one fifteenth of a teaspoon.” Science YouTuber Hank Green explains that both figures can be technically correct, because the difference comes down to where you start measuring AI’s water use: during a single query or across its entire lifecycle.
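To put those figures on a common scale, here is a rough back-of-the-envelope sketch in Python. It uses only the numbers quoted above plus standard US unit conversions; the variable names are illustrative, and the "people equivalent" is simply the projected total divided by the per-person figure, not a claim from any of the sources.

```python
# Standard US customary conversions
LITERS_PER_US_GALLON = 3.785411784
ML_PER_US_TEASPOON = 4.92892159375

# Figures quoted in the article
altman_gallons_per_query = 0.000085
projected_liters_per_year = 1_068e9   # 1,068 billion liters (Morgan Stanley, 2028)
american_liters_per_year = 243_174

# Altman's per-query figure, converted to milliliters and teaspoons
ml_per_query = altman_gallons_per_query * LITERS_PER_US_GALLON * 1000
teaspoon_fraction = ml_per_query / ML_PER_US_TEASPOON

# How many average Americans' annual use the 2028 projection corresponds to
people_equivalent = projected_liters_per_year / american_liters_per_year

print(f"Per query: {ml_per_query:.2f} mL (about 1/{1 / teaspoon_fraction:.0f} of a teaspoon)")
print(f"2028 projection: roughly {people_equivalent / 1e6:.1f} million Americans' annual water use")
```

On these numbers, Altman's gallon figure and his teaspoon comparison agree with each other (about a third of a milliliter), while the Morgan Stanley projection works out to the annual water use of a little over four million people.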

The Cooling Conundrum

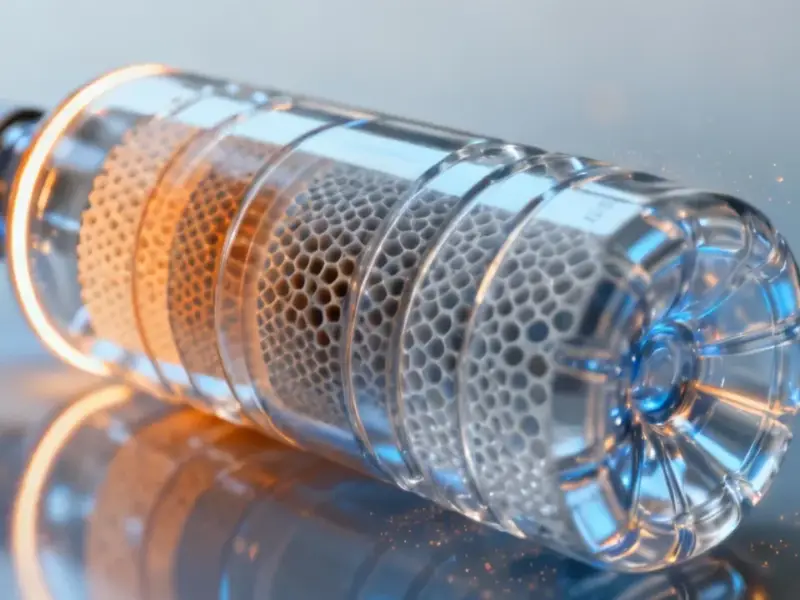

The core of the issue is physics. AI runs on powerful GPUs in data centers, and those chips generate enormous amounts of heat, more than standard air conditioning can handle. As McKinsey’s Pankaj Sachdeva notes, liquid cooling is simply more effective. So data centers use either evaporative cooling towers, which evaporate huge volumes of water, or closed-loop systems such as direct-to-chip cooling. Closed-loop systems use specialized fluids and almost no water on-site, but they demand more electricity. It’s a classic engineering trade-off: save water, burn more power. Brandon Daniels, CEO of AI supply chain firm Exiger, puts it bluntly: cooling these new servers needs “expensive fluids, tons of good quality water, heavy filtration, and a massive amount of power.” It’s a two-part problem: getting heat off the chip, and then getting it out of the building.

What’s In A Number?

So why the wild discrepancy between a teaspoon and a trillion liters? Altman’s tiny teaspoon counts only the water used during the split second your query is processed. Morgan Stanley’s massive figure covers the entire lifecycle: the water used to generate the electricity powering the data center, the water used to manufacture the semiconductors inside those GPUs, and the water for on-site cooling. Hank Green calls Altman’s claim a lie of omission. When you trace the water back through every step required to make an AI query possible, the numbers balloon. MIT fellow Noman Bashir suggests the figure might be about two liters of water for every kilowatt-hour of energy used. Suddenly, that teaspoon doesn’t seem so innocent.
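A minimal sketch of how a per-kilowatt-hour estimate turns into a per-query figure, assuming Bashir's rough two liters per kWh. The energy-per-query values below are hypothetical placeholders chosen only to show the scaling; the article gives no such number, and `water_liters` is an illustrative helper, not anyone's published method.

```python
def water_liters(energy_kwh: float, liters_per_kwh: float = 2.0) -> float:
    """Water attributed to a given amount of energy, at a flat liters-per-kWh rate."""
    return energy_kwh * liters_per_kwh

# Hypothetical energy per query, in watt-hours (placeholders, not sourced figures)
hypothetical_wh_per_query = [0.3, 3.0, 30.0]

for wh in hypothetical_wh_per_query:
    ml = water_liters(wh / 1000) * 1000  # Wh -> kWh, then liters -> mL
    print(f"{wh:>5} Wh per query -> {ml:.1f} mL of water")
```

The point is the sensitivity: under a flat rate, the water attributed to a query scales directly with whatever energy figure you plug in, which is why undisclosed energy data makes the per-query number so easy to argue over.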

Context And Lack Of Clarity

Now, even the biggest estimates are still small compared to other industries. Green and the Morgan Stanley report both point out that AI’s projected water use is dwarfed by agriculture or municipal use. In Arizona’s Maricopa County, data centers might use up to 177 million gallons of water a day for cooling, but that’s still only 30% of what local agriculture uses. The bigger issue, though, is transparency. As Green says, “OpenAI doesn’t share this information, which is part of why it is so easy to get numbers that are both fairly correct and very different from each other.” Companies are racing to build more efficient cooling solutions, like the advanced direct-to-chip and immersion systems Daniels mentioned, which are becoming a necessity, not a luxury. But without clear data from the AI giants themselves, we’re left guessing about the true cost of every chatbot conversation. And in an industry pushing the limits of hardware, from the chips to the industrial panel PCs that often manage these complex environments, that lack of clarity is a problem for everyone trying to build a sustainable future.